Computer Vision (Nishino Lab)

In our laboratory, we study computer vision, the science of establishing the theoretical foundations and practical implementations that give computers the ability to see; its findings also inform studies of human vision. Armed with machine learning and optics, we aim to elevate computer vision to an intelligent perceptual modality for computers, rather than merely a means for humans to consume images and videos efficiently.

Academic Staff

Ko Nishino

Professor (Graduate School of Informatics)

Research Interests

Visual information processing / Computer vision / Machine learning

Contact

Research Bldg #9, South wing, Room S-303

TEL: +81-75-753-4891

https://vision.ist.i.kyoto-u.ac.jp/

Ken Sakurada

Associate Professor (Graduate School of Informatics)

Research Interests

Computer Vision, Robotics, Autonomous Driving (Perception), Remote Sensing

Contact

Room I-302, 3rd Floor, South Wing, Research Building No.9

TEL: +81-75-753-5883

Spatial Context Sensing Research Project

Personal page

Ryo Kawahara

Lecturer (Graduate School of Informatics)

Research Interests

Computer Vision, Computational Photography, Physics-based Vision

Contact

Room I-303, 3rd Floor, South Wing, Research Building No.9

TEL: +81-75-753-3327

Introduction to R&D Topics

Perceiving People

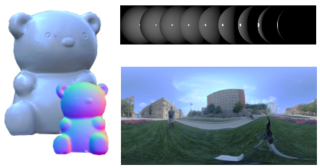

Perceiving Things

Seeing Better

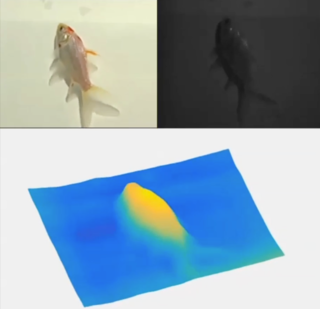

Understanding Spatial Context

For autonomous vehicles and robots to operate safely in the real world, they must sense the surrounding environment with sensors such as cameras and LiDAR and interpret the spatial context of the scene from the acquired data. Our lab focuses on developing robust spatial understanding systems that meet practical requirements, including real-time performance and privacy preservation, and that remain reliable even under limited sensing and computational resources. In particular, we study methods that leverage sensor data and temporal scene dynamics to enable accurate and efficient processing of camera and LiDAR information. For more details, please refer to this page.